Engineering

When building AI chat is actually hard (how and why we built our agents)

Anh-Tho Chuong • 6 min read

Jul 15, 2024

/9 min read

Pricing AI products is tough.

That goes for all AI products: model vendors (e.g. Mistral, OpenAI), hardware vendors (e.g. Groq, Nvidia), and services that proxy AI for users (e.g. Intercom).

Why? Because pricing AI is a unit-based problem. AI models are expensive to run. They demand real compute and GPU power. Abstracting AI into a basic monthly subscription is dangerous; it can easily become unprofitable.

We’ve seen a lot of AI pricing struggles through Lago. Notably, we’ve noticed that the problem breaks down into four questions: (a) units, (b) tiers, (c) terms, and (d) implementation. The first three are theoretical: how are costs calculated? The last is technical: how is billing executed?

Let’s discuss all of these considerations in detail.

What is the right unit for an AI application? Well, it depends. Units aren’t always straightforward. Options range from user-facing abstractions to bare-metal numbers.

A simple model that is common for APIs is billing based on requests. 100 requests made to an AI model? Great, that’s 100 units.

However, requests are not ideal for AI companies. With a typical API, each request takes a trivial amount of compute; with an AI model, one request might be far more expensive than another.

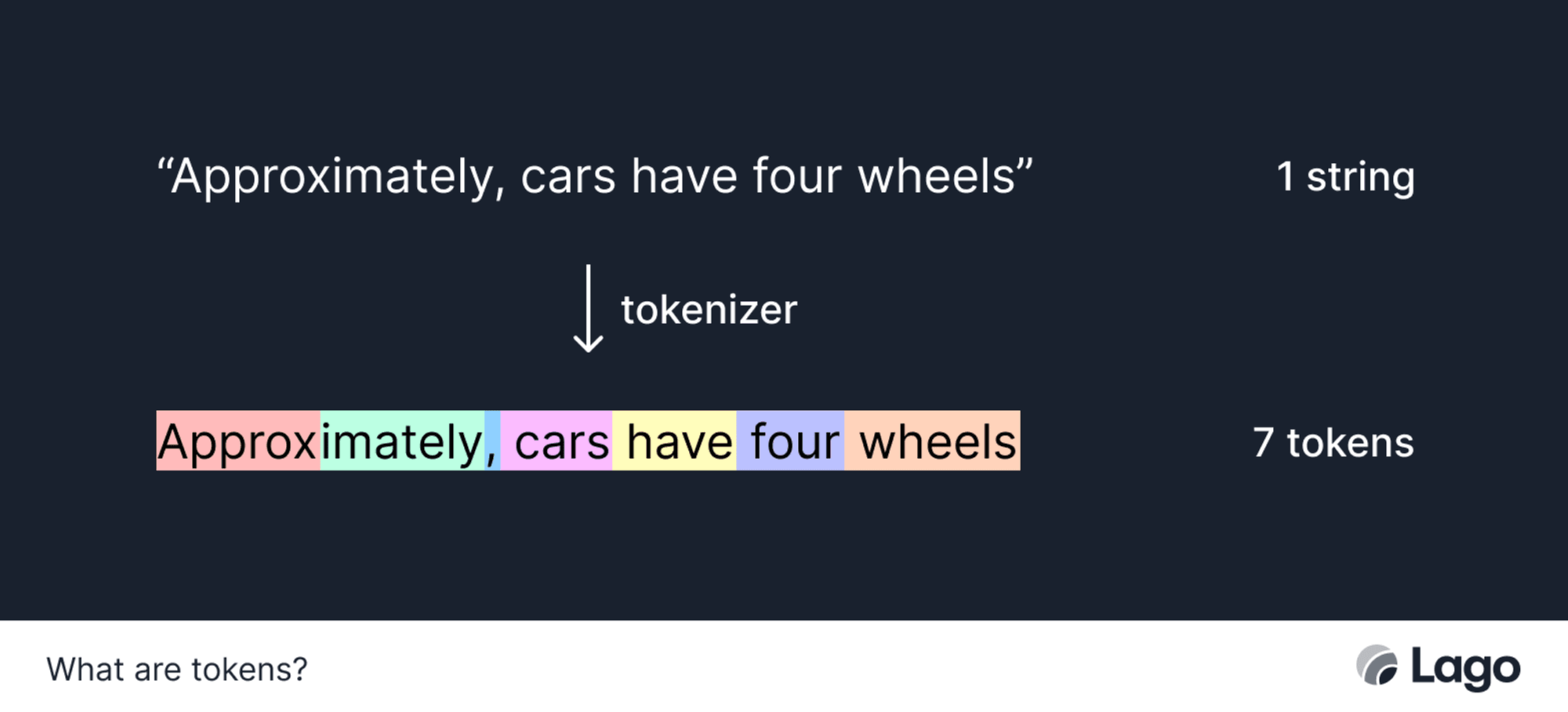

Tokens are the answer to the pitfalls of requests. Tokenization is the process of breaking text into subword units. Each of these units is a token. Tokens recognize the difference between processing a sentence and processing an entire book.

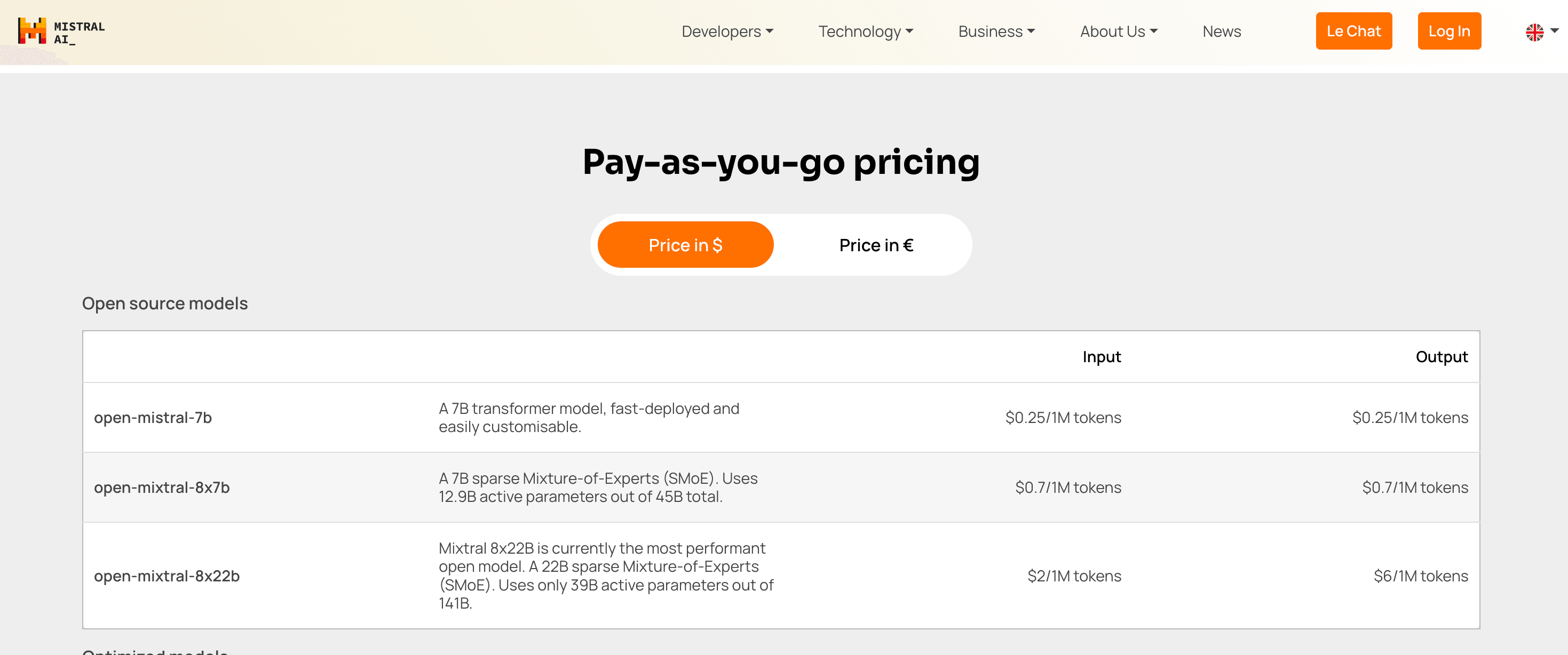

By pricing tokens, AI companies (particularly AI model vendors) can better approximate the compute power needed while keeping a user-facing abstraction. This is the model chosen by Mistral AI, which prices each model per million tokens used.

Tokens can measure both the size of the input and the size of the output. Sometimes, these are incredibly different. The query “Write me a ten-page story about corn harvesting” has a tiny number of input tokens yet a relatively massive number of output tokens.

Accordingly, [Mistral.ai](http://Mistral.ai) separates pricing for both:

You’ll notice that the pricing is also split by model type. We’ll discuss that more in the next section, Tiers.
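To make the input/output split concrete, here is a minimal sketch of how a single request could be priced. The rates and the `request_cost` helper are assumptions for illustration, not Mistral’s actual prices or API.

```python
from decimal import Decimal

# Hypothetical rates in USD per 1M tokens; not any vendor's real prices.
INPUT_RATE_PER_M = Decimal("2.00")
OUTPUT_RATE_PER_M = Decimal("6.00")
TOKENS_PER_M = Decimal(1_000_000)


def request_cost(input_tokens: int, output_tokens: int) -> Decimal:
    """Price one request with separate input and output token rates."""
    input_cost = Decimal(input_tokens) / TOKENS_PER_M * INPUT_RATE_PER_M
    output_cost = Decimal(output_tokens) / TOKENS_PER_M * OUTPUT_RATE_PER_M
    return input_cost + output_cost


# A short prompt asking for a long story: few input tokens, many output tokens.
story_cost = request_cost(input_tokens=12, output_tokens=6_000)
print(story_cost)
```

Almost all of the cost here comes from the output side, which is why a single blended rate would misprice this kind of request.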

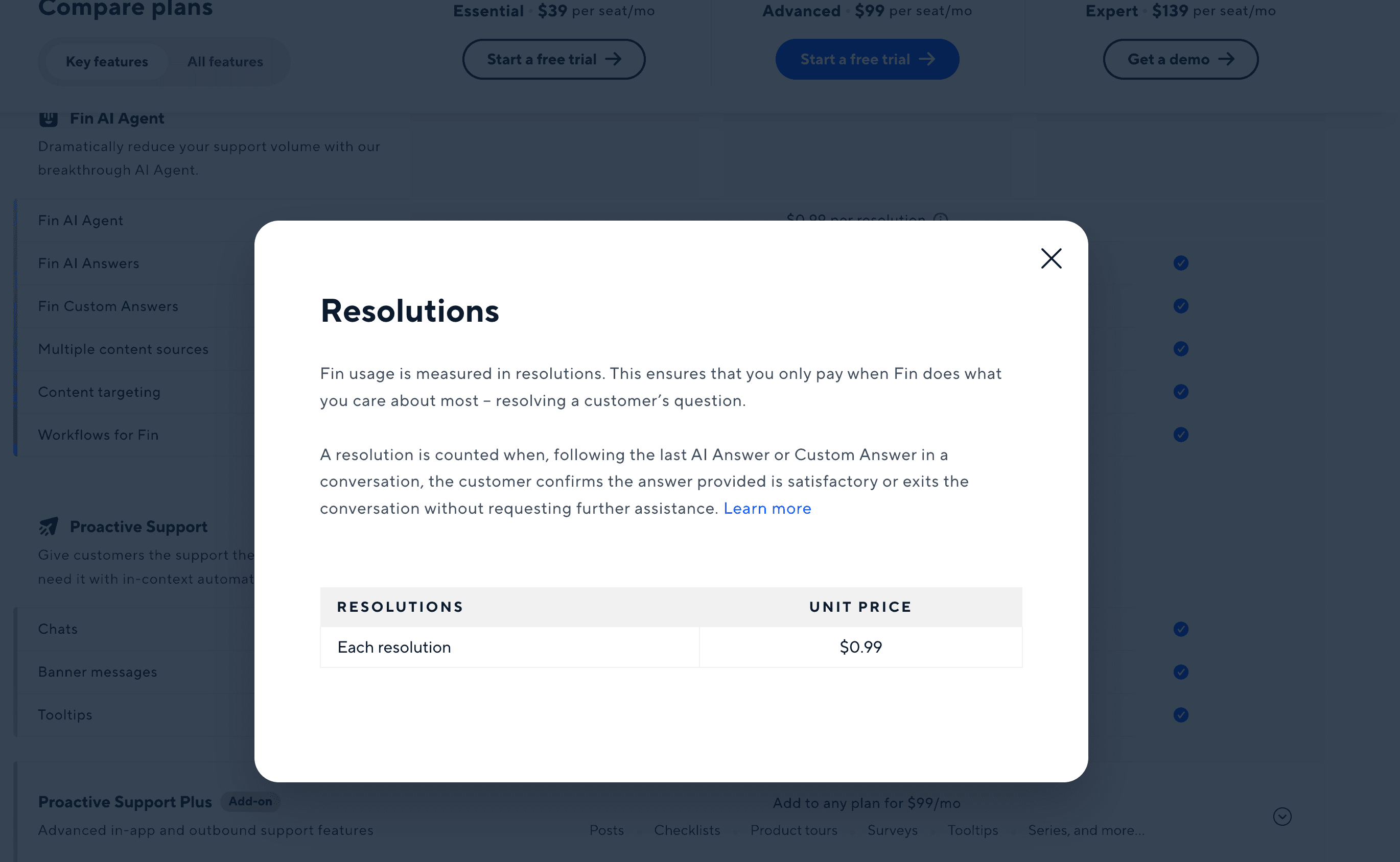

For some companies, metering around underlying model requests isn’t fair to the user. This is particularly true of companies that leverage AI models to provide a specific service to the user. Intercom is a great example; it ships an AI bot named Fin that manages customer interactions.

Instead, these companies need to bill around successes. What constitutes a success depends on the product. For Intercom, it’s resolutions of customer questions:

Example of Intercom’s new AI features pricing and packaging

Technically, this model does allow for infinite model interactions under the hood. For example, what if an Intercom end-user was being really, really difficult? However, by pricing a success well above the average costs needed—alongside rate-limiting model usage—products can profitably leverage successes as a unit.

For the customer, this model is also easy math. If the product saves an average of $X per successful outcome, and if the product costs $Y per outcome, then it’s just subtraction. $X - $Y. The end.
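Both sides of that math fit in a few lines. The sketch below uses made-up dollar figures and hypothetical function names; it only illustrates the subtraction for the customer and the matching margin check for the vendor.

```python
from decimal import Decimal


def customer_net_saving(saved_per_outcome: Decimal, price_per_outcome: Decimal) -> Decimal:
    """The customer's side of the math: $X - $Y per successful outcome."""
    return saved_per_outcome - price_per_outcome


def vendor_margin(price_per_outcome: Decimal, avg_model_cost: Decimal) -> Decimal:
    """The vendor's side: price per success minus the average model cost behind it."""
    return price_per_outcome - avg_model_cost


# Hypothetical figures, chosen only for illustration.
saved = Decimal("5.00")       # $X: what a resolved question saves the customer
price = Decimal("1.00")       # $Y: what the vendor charges per resolution
model_cost = Decimal("0.20")  # average model spend per resolution

print(customer_net_saving(saved, price))  # 4.00
print(vendor_margin(price, model_cost))   # 0.80
```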

Physical pricing is an even more raw form of pricing. This only applies to infrastructure companies like Nvidia, which charge per CPU socket. This may be a flat cost if the hardware is dedicated to the user; alternatively, it may be metered in hours if the hardware is dynamically allocated to user work.

Units are only the first half of pricing. After units, there are tiers.

When it comes to AI pricing, there are primarily two types of tiering: model tiering and subscription tiering.

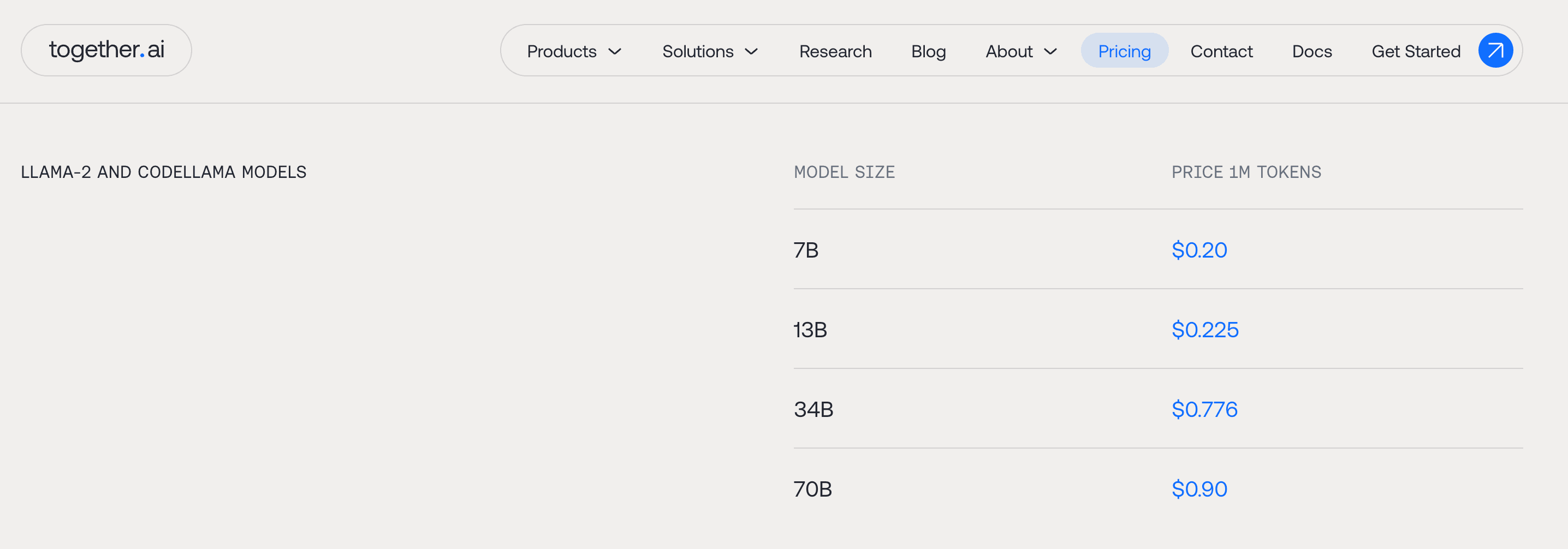

For companies like Mistral or [Together.ai](http://Together.ai), pricing based on tokens requires model tiers. Some models are more expensive to run than others. As a result, token rates need to be adjusted accordingly.

Together.ai, for instance, offers a 4.5x spread on token rate depending on the model size, spanning from $0.20 / 1M tokens to $0.90 / 1M tokens.

Some customers might use multiple models. Accordingly, their billing should have multiple line-items, each with a different token rate.
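As a rough sketch of what per-model line items could look like, the snippet below prices usage for two models. The model names and the `build_line_items` helper are hypothetical; the two rates simply reuse the $0.20 and $0.90 ends of the spread quoted above.

```python
from dataclasses import dataclass
from decimal import Decimal

# Hypothetical model names; rates are USD per 1M tokens.
RATES_PER_M = {
    "small-model": Decimal("0.20"),
    "large-model": Decimal("0.90"),
}


@dataclass(frozen=True)
class LineItem:
    model: str
    tokens: int
    amount: Decimal


def build_line_items(usage: dict[str, int]) -> list[LineItem]:
    """Turn per-model token counts into one invoice line per model."""
    items = []
    for model, tokens in sorted(usage.items()):
        rate = RATES_PER_M[model]
        # Round each line to whole cents.
        amount = (Decimal(tokens) / Decimal(1_000_000) * rate).quantize(Decimal("0.01"))
        items.append(LineItem(model, tokens, amount))
    return items


invoice = build_line_items({"small-model": 40_000_000, "large-model": 5_000_000})
total = sum(item.amount for item in invoice)
print(total)  # 12.50
```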

Like many SaaS applications, AI applications often break pricing into monthly or yearly subscription tiers. More expensive tiers may unlock more advanced features; they also may unlock cheaper metered rates given the monthly price floor.

This model is particularly popular for companies that provide a chat interface to access AI models. Because the interface has value itself, it’s billed as a subscription tier price atop the unit rates.

A great example of this is Hugging Face, which has different subscription tiers like Pro, Enterprise, and Hub:

Arguably, some companies, like OpenAI, charge strictly a subscription rate (with no metered pricing) for user-facing products like ChatGPT. However, these products can skip usage-based pricing because they enforce strict rate limits and hard limits on message length.

A company could hypothetically include both subscription tiers and model-based pricing. However, if the subscription tiers also alter the model-based pricing rate, that’ll likely confuse users. Part of pricing is communicating value. It’s critical for that value messaging to not be diluted by unnecessary complexity.

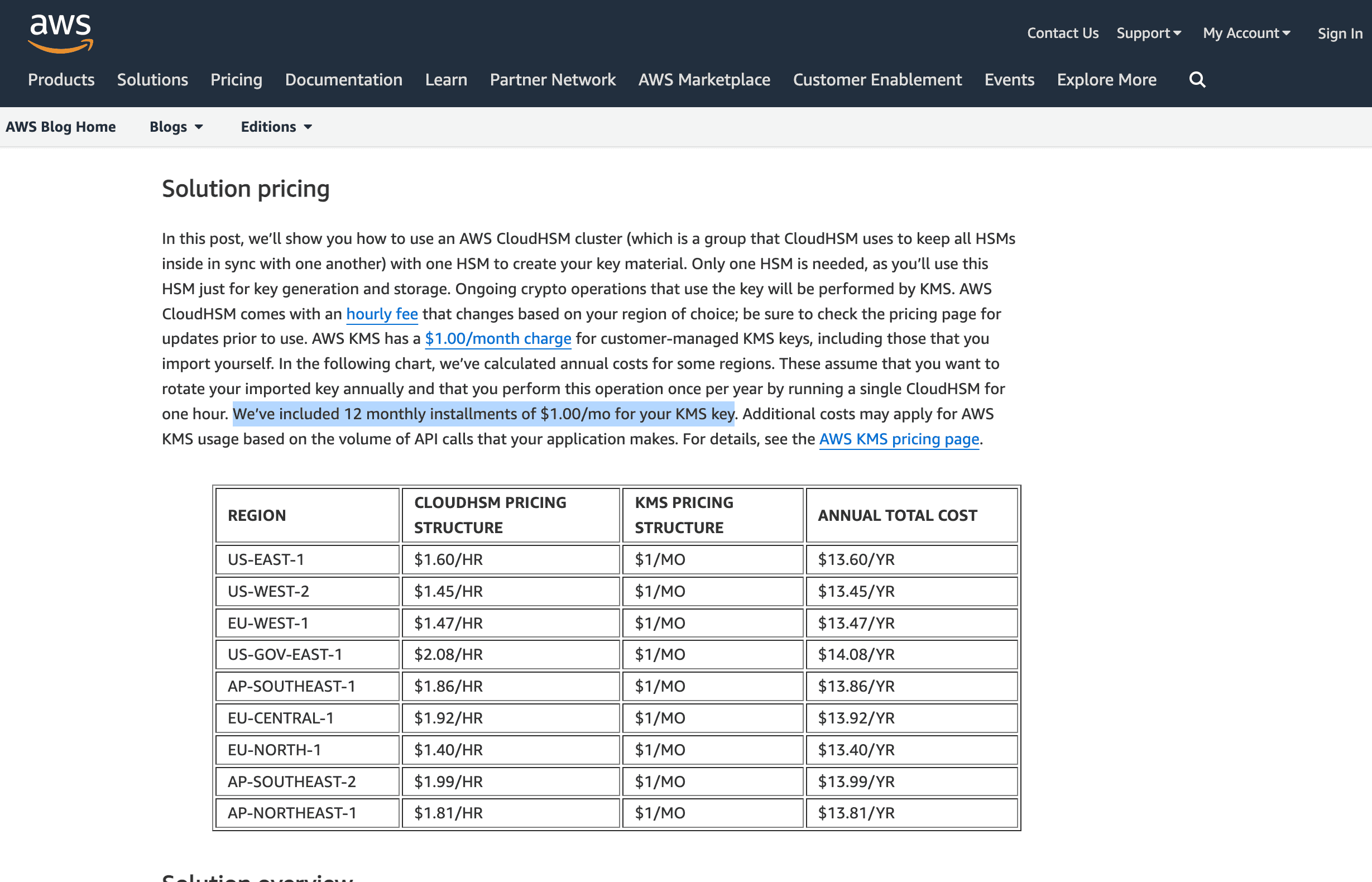

Another element that is arguably a separate tier is BYOK pricing. BYOK—or Bring Your Own Key—is a security feature that enables customers to control encryption keys while using specific services. This often spurs a separate pricing tier for personalized key installment.

BYOK is available in enterprise-targeting products from providers like Amazon.

While units and tiers define the base cost model, there are additionally terms to consider. For instance, when are customers billed? How does a company safeguard against taking a loss due to a delinquent customer? How does enterprise billing work?

These edge-case questions are as big a deal as units and tiers.

For many SaaS applications, if a user doesn’t pay, it’s not a big deal. Sure, that implies that they are churning, which translates to lost revenue, but the cost of the service was likely minimal.

However, with AI, this isn’t exactly the case. AI is expensive. If a user racks up a massive AI bill and doesn’t pay, then the entire customer account may be unprofitable to the business.

Thankfully, there are a few pricing models that mitigate this risk.

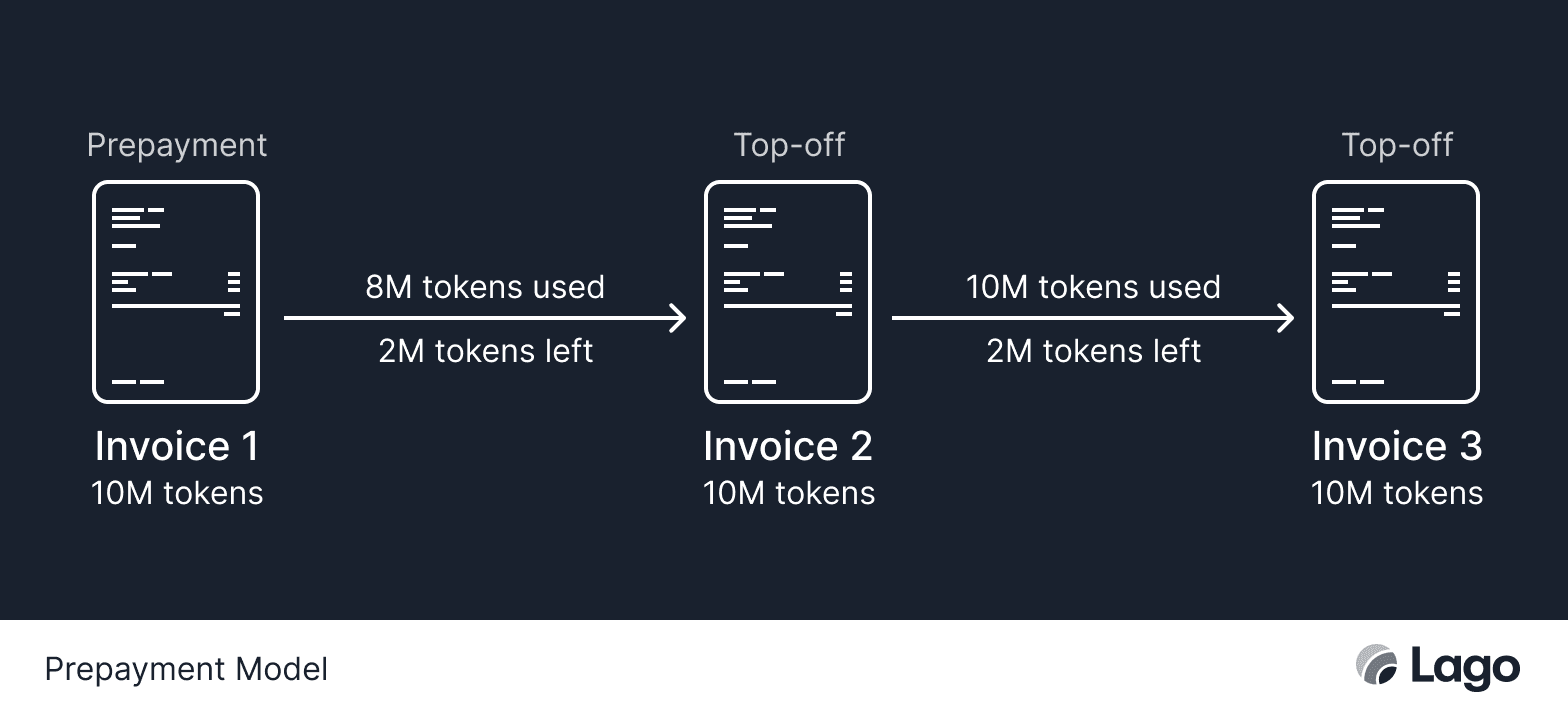

The obvious solution to a customer not paying is to request prepayment. If users purchase tokens (or whatever unit) in advance, there is no risk of nonpayment. The credits are simply consumed over time.

This creates a new challenge, however. Customer accounts cannot just grind to a halt if credits are exhausted. That would be punishing the best users. Rather, companies need to automatically top up credits whenever they run dry, or tolerate some level of negative balance.
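The two escape hatches can be sketched as a small wallet object. Everything here (the `CreditWallet` class, its fields, the numbers) is a hypothetical illustration; a real top-up would also charge a payment method, which is omitted.

```python
from dataclasses import dataclass


@dataclass
class CreditWallet:
    balance: int              # prepaid credits, e.g. tokens
    top_up_amount: int = 0    # credits bought per automatic top-up (0 disables it)
    overdraft_limit: int = 0  # how far below zero the balance may go
    top_ups: int = 0          # number of automatic top-ups performed

    def consume(self, credits: int) -> bool:
        """Deduct usage; return False if it cannot be covered."""
        # Option 1: top up automatically until the usage is covered.
        while self.top_up_amount > 0 and self.balance < credits:
            self.balance += self.top_up_amount
            self.top_ups += 1
        # Option 2: tolerate a bounded negative balance.
        if self.balance - credits < -self.overdraft_limit:
            return False
        self.balance -= credits
        return True


auto = CreditWallet(balance=100, top_up_amount=500)
auto.consume(250)  # triggers one top-up, leaving 350 credits

lenient = CreditWallet(balance=100, overdraft_limit=50)
lenient.consume(140)  # allowed: balance drops to -40
lenient.consume(20)   # refused: would exceed the overdraft limit
```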

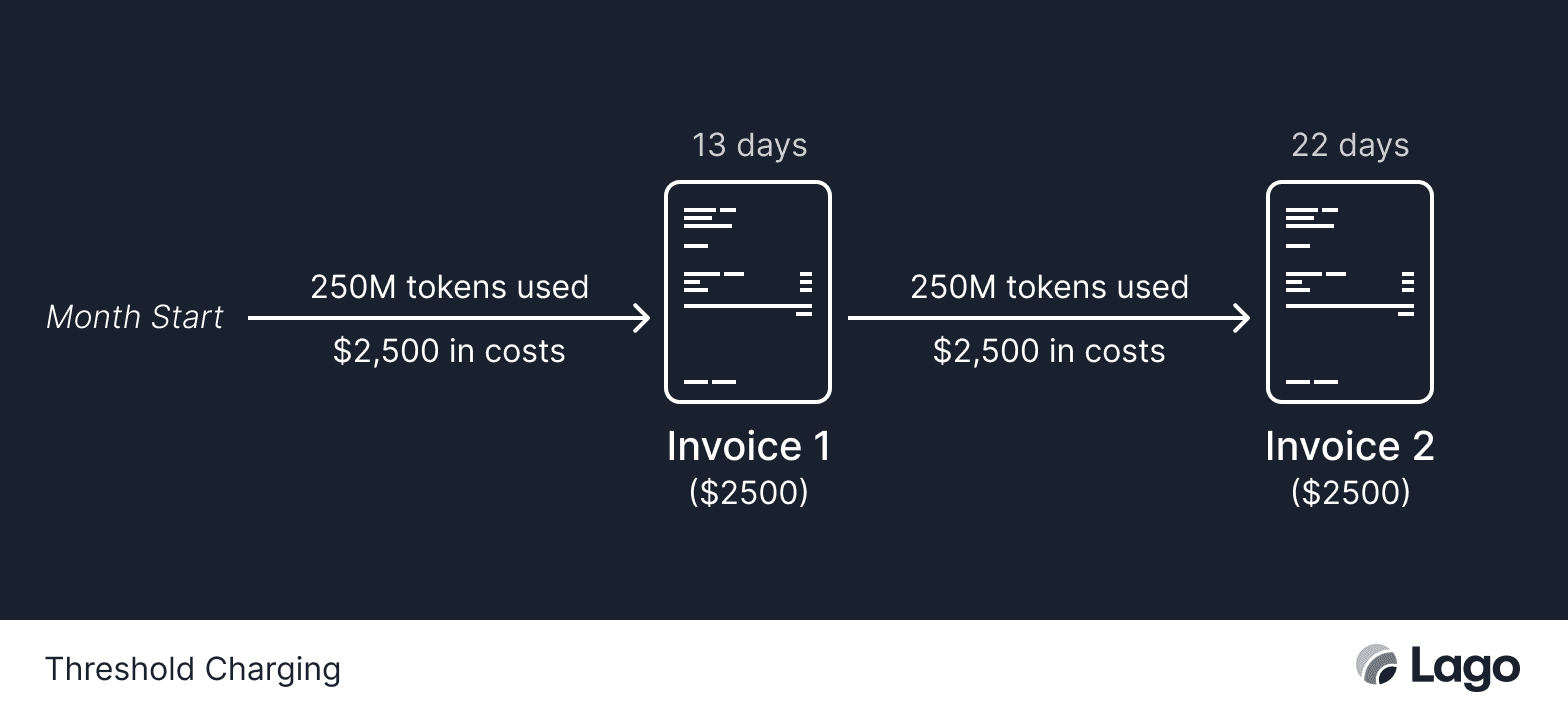

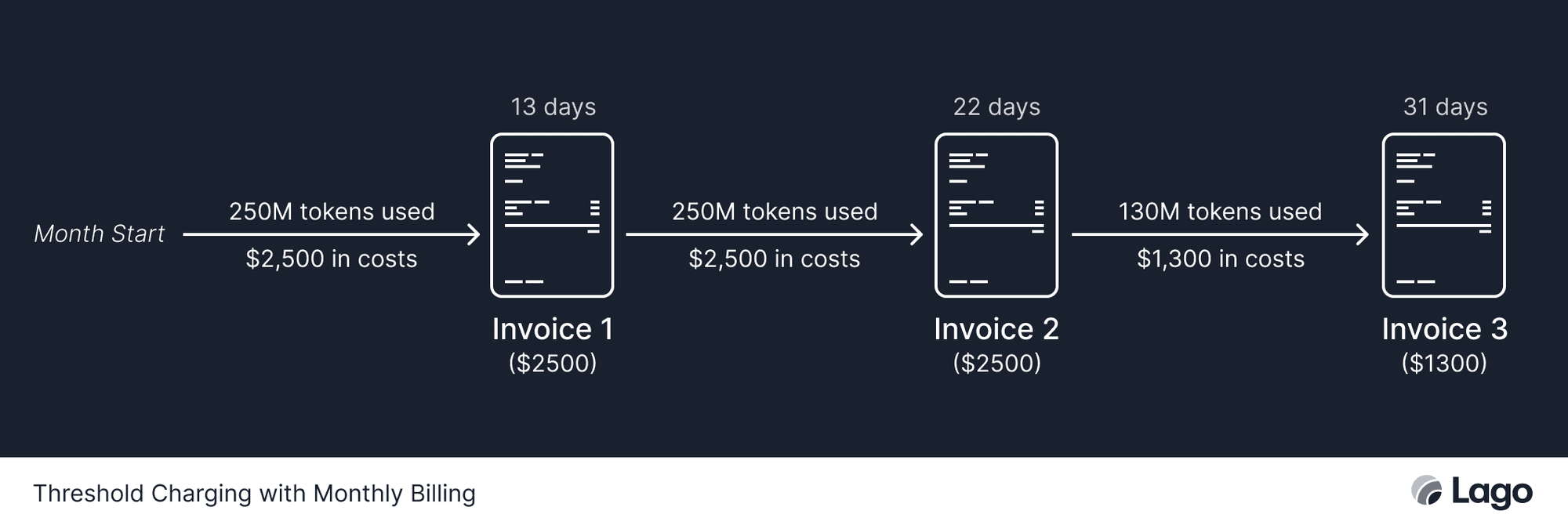

The other solution is to bill customers whenever usage crosses a reasonable threshold. For instance, if a company can tolerate a $5,000 loss, it can bill the client each time usage reaches $2,500 in costs. If the client reaches another $2,500 tranche without paying the last invoice, service can be cut off, which caps the exposure at $5,000. This is particularly reasonable if the payment method is set to auto-pay.

While this does introduce some risk, it may be preferred over prepayment if there are concerns that prepayment will negatively impact sales. It also transparently provides the customer with a reminder of their spending rate.

There is a complication with this strategy, however. Some customers may reach the billing threshold really slowly. Hypothetically, some might never reach it. To address this, customers should still be billed at a monthly (or yearly) cadence. That way, their balance is wiped out, restarting the cycle.
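Here is one way the threshold logic and the periodic cadence could fit together. The `ThresholdBiller` class and its method names are assumptions made for this sketch, which reuses the $2,500 tranche from the example above.

```python
from dataclasses import dataclass, field
from decimal import Decimal


@dataclass
class ThresholdBiller:
    threshold: Decimal
    unbilled: Decimal = Decimal("0")
    open_invoices: list = field(default_factory=list)  # unpaid invoice amounts
    suspended: bool = False

    def record_usage(self, cost: Decimal) -> None:
        """Accrue usage and invoice as soon as the threshold is crossed."""
        self.unbilled += cost
        if self.unbilled < self.threshold:
            return
        if self.open_invoices:
            # A second tranche accrued while the last invoice is still unpaid.
            self.suspended = True
        self._issue_invoice()

    def close_period(self) -> None:
        """Monthly (or yearly) cadence: bill whatever accrued below the threshold."""
        if self.unbilled > 0:
            self._issue_invoice()

    def mark_paid(self) -> None:
        self.open_invoices.clear()
        self.suspended = False

    def _issue_invoice(self) -> None:
        self.open_invoices.append(self.unbilled)
        self.unbilled = Decimal("0")


heavy = ThresholdBiller(threshold=Decimal("2500"))
heavy.record_usage(Decimal("2600"))  # first invoice issued
heavy.record_usage(Decimal("2500"))  # second tranche, first unpaid: suspended

slow = ThresholdBiller(threshold=Decimal("2500"))
slow.record_usage(Decimal("300"))
slow.close_period()                  # billed at period end despite low usage
```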

Sometimes, metered pricing may also have spending minimums. This is independent of a subscription tier—the minimum is applied to units (e.g. tokens) used. For example, if the minimum is 5M tokens / month, and the user only used 3M tokens last month, then they’ll still be charged for the full 5M.

Spending minimums often equal one base unit. For instance, if the rate is $5.00 / 1M tokens, and 1M tokens is the minimum, then any usage below a single base unit (e.g. 800K tokens) would round up to the full $5.00.
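The minimum boils down to a `max` over usage. A minimal sketch, with a hypothetical `metered_charge` helper and the figures from the two examples above:

```python
from decimal import Decimal


def metered_charge(used_tokens: int, minimum_tokens: int, rate_per_m: Decimal) -> Decimal:
    """Charge for actual usage, but never for less than the minimum."""
    billable = max(used_tokens, minimum_tokens)
    return Decimal(billable) / Decimal(1_000_000) * rate_per_m


rate = Decimal("5.00")  # USD per 1M tokens

# 3M tokens used against a 5M minimum: billed as 5M.
print(metered_charge(3_000_000, 5_000_000, rate))  # 25.00

# 800K tokens used against a 1M minimum: rounds up to one base unit.
print(metered_charge(800_000, 1_000_000, rate))    # 5.00
```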

AI companies often offer enterprise contracts with negotiated prices. This creates the need to tailor prices, thresholds, and packages for each individual enterprise customer.

This personalization can sometimes be expensive; maintaining unique pricing for each contract naturally leads to bigger administrative overhead. It also requires a more flexible billing system to account for the variations.

Taxes always add a layer of complexity to billing. They are particularly complex for AI companies.

AI tokens are a brand new tax item, which isn’t supported by many tax providers. AI companies also sometimes mix physical products (racks and chips) with software products (tokens, models, and compute).

The final layer of complexity is that AI companies often sell to consumer and corporate customers worldwide, each category with its own tax implications.

There remains one last question: how do you implement units, tiers, and terms? For starters, usage-based billing is complex. With AI’s heavy costs, the stakes for nailing the right billing scheme are high.

Metering AI is hard because of volume. For many applications, AI is measured in millions of tokens. Some endpoints might consume billions of tokens per month.

With multiple users and multiple AI models spanning various modalities (text, images, voices, and training), the complications of executing billing only grow.

There are primarily two strategies for tracking AI usage, both with pros and cons.

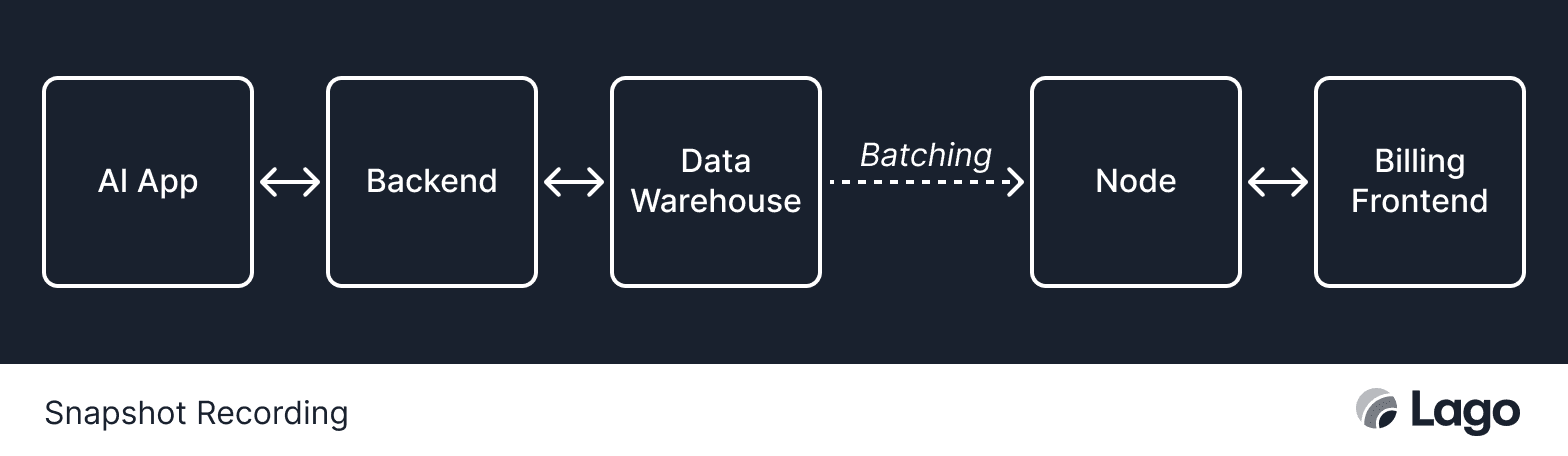

A common technique for recording usage is by using snapshots. Snapshots aggregate data within a set sub-interval that is much smaller than the billing cycle. Common sub-intervals are hours or days.

Snapshot recording is less granular for the end user: it puts usage into buckets without revealing the exact timestamp of each bucket entry. However, it is easier to maintain and far more cost-effective. An external job simply calculates usage in the background and reports it to the user whenever it is available.
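A background snapshot job can be sketched as a simple bucketing pass. The `hourly_snapshots` function and the `(timestamp, customer_id, tokens)` tuple layout are assumptions for illustration; a production job would read from a usage store rather than an in-memory list.

```python
from collections import defaultdict
from datetime import datetime, timezone


def hourly_snapshots(events):
    """Aggregate (timestamp, customer_id, tokens) tuples into hourly buckets.

    The result keeps only the bucket start, not each event's exact timestamp.
    """
    buckets = defaultdict(int)
    for timestamp, customer_id, tokens in events:
        hour = timestamp.replace(minute=0, second=0, microsecond=0)
        buckets[(customer_id, hour)] += tokens
    return dict(buckets)


raw_events = [
    (datetime(2024, 7, 15, 9, 5, tzinfo=timezone.utc), "acme", 1_200),
    (datetime(2024, 7, 15, 9, 40, tzinfo=timezone.utc), "acme", 800),
    (datetime(2024, 7, 15, 10, 1, tzinfo=timezone.utc), "acme", 500),
]

for (customer, hour), tokens in sorted(hourly_snapshots(raw_events).items()):
    print(customer, hour.isoformat(), tokens)
```

Three raw events collapse into two rows here; at real volumes, that reduction is what keeps storage and reporting costs down.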

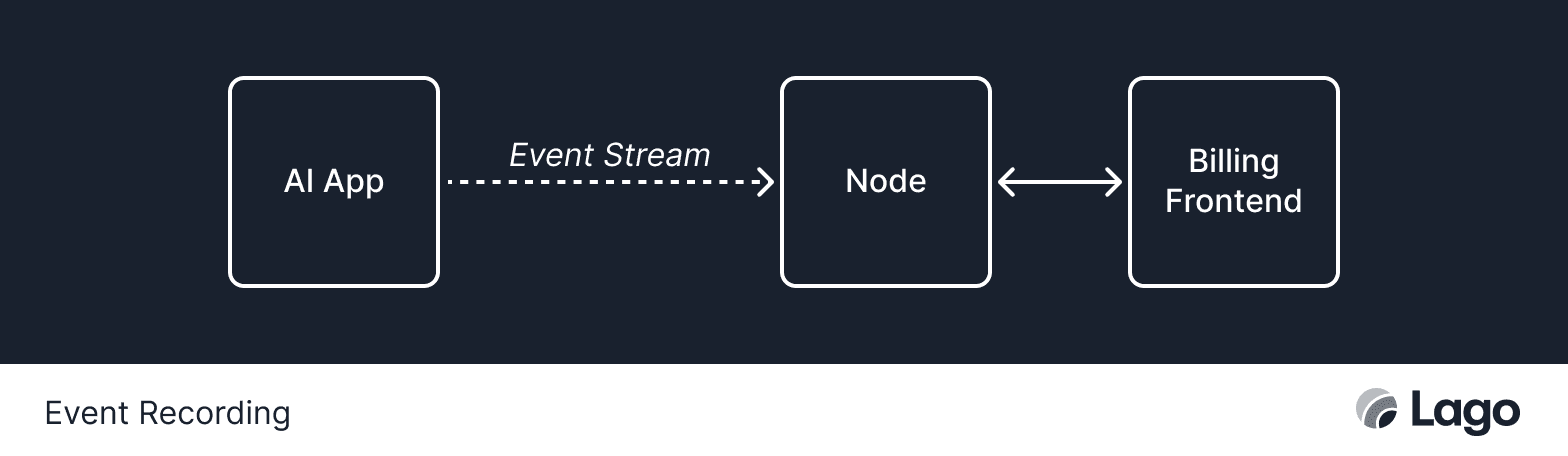

Alternatively, companies can use event recording. Event recording means dispatching the number of units consumed (e.g. tokens) to a node after every request. That node shares the events with the end user’s frontend.

Event recording may be appropriate whenever usage is relatively low. But it becomes extremely expensive on compute for both the backend and frontend whenever usage grows past the hundreds of thousands of events.
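For contrast with bucketed snapshots, a bare-bones event recorder might look like the sketch below. The `UsageEvent` and `EventRecorder` names are hypothetical, and the in-memory list stands in for whatever queue or store a real system would use.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class UsageEvent:
    customer_id: str
    model: str
    tokens: int
    recorded_at: datetime


class EventRecorder:
    """Keeps one record per request, with its exact timestamp."""

    def __init__(self) -> None:
        self.events: list = []

    def record(self, customer_id: str, model: str, tokens: int,
               now: Optional[datetime] = None) -> UsageEvent:
        event = UsageEvent(customer_id, model, tokens,
                           now or datetime.now(timezone.utc))
        self.events.append(event)
        return event

    def total_tokens(self, customer_id: str) -> int:
        # Scans every stored event, which is what gets costly at high volume.
        return sum(e.tokens for e in self.events if e.customer_id == customer_id)


recorder = EventRecorder()
recorder.record("acme", "small-model", 1_200)
recorder.record("acme", "large-model", 800)
print(recorder.total_tokens("acme"))  # 2000
```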

Finance teams have to pay special attention to compute costs given how expensive they can be when running AI models. This includes carefully managing pricing strategies so that they align with the operational expense of running the models.

Pricing is hard. AI has made pricing harder. It’s a necessary battle to create a winning and profitable product.

My biggest piece of advice is to not dismiss the challenge of creating a good pricing engine. The most common mistake I’ve seen is companies trying to fix their pricing woes via bandage solutions stacked atop each other. It makes for a clunky codebase and spawns billing errors.

Instead, use a proper framework and scope out all the edge cases for billing at the start. If you need to alter billing strategies in the future, you can. What you don’t want is to constantly be tripping over billing blunders and risking going negative with a customer.