OpenAI's per-token pricing

Discover how OpenAI, the market leader in generative AI, makes its offering more flexible with its per-token pricing based on dimensions.

What's in it for you?

In this article, you will learn how to build a billing system based on tokens.

This template is suitable for Large Language Model (LLM) and Generative AI companies whose pricing can vary based on the application or model used.

What's the secret sauce?

For OpenAI, pricing depends on the language model used. Here are several price points they offer:

"Prices are per 1,000 tokens. You can think of tokens as pieces of words, where 1,000 tokens is about 750 words (learn more here)."

1 - GPT-3.5 Turbo

2 - GPT-4

Here’s how you can replicate this pricing and billing system with Lago.

What do you need?

The first thing to do is to create your company account on Lago Cloud or deploy Lago Open Source on your existing infrastructure. In both cases, you should ask a back-end developer to help you with the setup.

Our documentation includes a step-by-step guide on how to get started with our solution.

Instruction manual

Step 1 – How to create metric groups

Lago monitors usage by converting events into billable metrics. To illustrate how this works, we are going to take GPT-4 as an example.

OpenAI’s GPT-4 pricing includes a single metric based on the total number of tokens processed on the platform.

To create the corresponding metric, we use the ‘sum’ aggregation type, which records usage and calculates the total number of tokens used. In this case, the metric is metered, which means that usage is reset to 0 at the beginning of each billing cycle.

Two dimensions affect the price per token for this metric:

- Model: 8K context or 32K context; and

- Type: Input data or Output data.

Therefore, we should add these two dimensions to our billable metric. This will allow us to group events according to the model and type.

At the end of the billing period, Lago will automatically bill customers for the total token usage of each model and type.
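To make the grouping concrete, here is a minimal Python sketch of what a ‘sum’ aggregation over two dimensions amounts to. It does not call Lago; the event shape and the property names (`total`, `model`, `type`) are assumptions chosen for illustration, and the token counts are made up.

```python
from collections import defaultdict

# Hypothetical usage events for one billing period.
# The property names ("model", "type", "total") are illustrative assumptions.
events = [
    {"model": "8k", "type": "input", "total": 5000},
    {"model": "8k", "type": "output", "total": 1200},
    {"model": "32k", "type": "input", "total": 3000},
    {"model": "8k", "type": "input", "total": 2500},
]


def sum_by_dimensions(events):
    """Sum the 'total' property for each (model, type) combination."""
    totals = defaultdict(int)
    for event in events:
        totals[(event["model"], event["type"])] += event["total"]
    return dict(totals)


usage = sum_by_dimensions(events)
for (model, type_), tokens in sorted(usage.items()):
    print(f"{model:>4} {type_:<6} {tokens} tokens")
```

Each (model, type) pair ends up with its own running total, which is what lets a separate price be attached to each combination later on.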

We can apply the same method to create billable metrics for GPT-3.5 Turbo.

Step 2 – How to set up per-token pricing

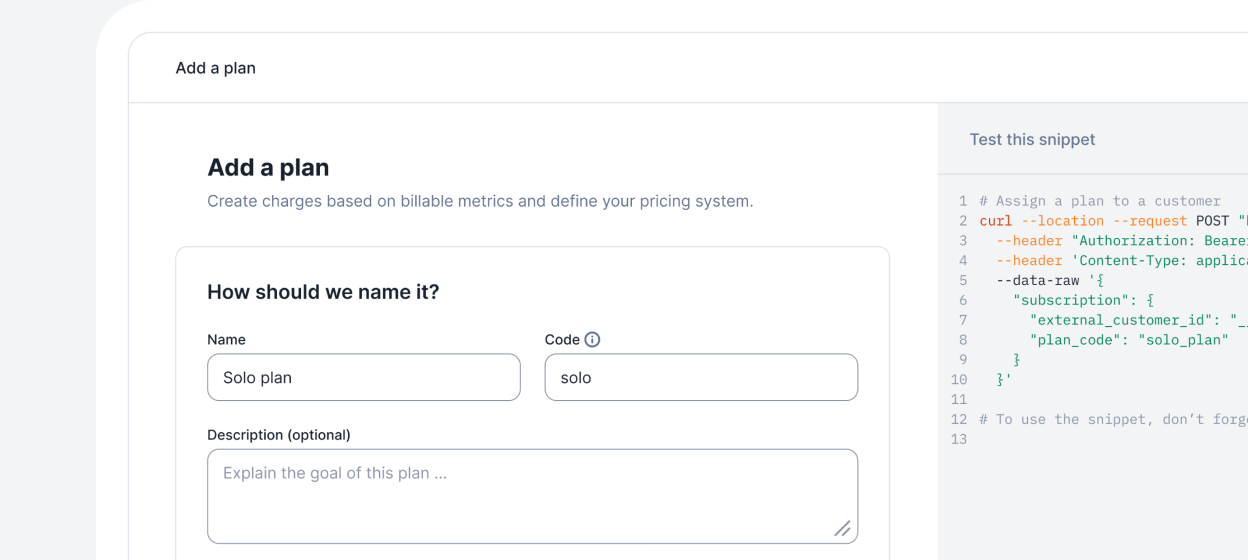

When creating a new plan, the first step is to define the plan model, including billing frequency and subscription fee. OpenAI pricing is ‘pay-as-you-go’, which means that there’s no subscription fee (i.e. customers only pay for what they use).

Here is how to set the monthly plan for GPT-4.

Our plan includes the ‘per 1,000 tokens’ charge, for which we choose the package pricing model. As we have defined two dimensions, we can set a specific price for each Model/Type combination.
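The arithmetic behind a ‘per 1,000 tokens’ package charge can be sketched in a few lines of Python. This is an illustration rather than Lago's implementation: it assumes a package is billed in full as soon as it is started, and the prices in `PRICES` are placeholder values, not figures taken from this article.

```python
from decimal import Decimal

PACKAGE_SIZE = 1000  # tokens per package ("per 1,000 tokens")

# Placeholder prices per package for each Model/Type combination (assumed values).
PRICES = {
    ("8k", "input"): Decimal("0.03"),
    ("8k", "output"): Decimal("0.06"),
    ("32k", "input"): Decimal("0.06"),
    ("32k", "output"): Decimal("0.12"),
}


def package_amount(tokens, model, type_):
    """Price a token total, assuming every started package is billed in full."""
    packages = -(-tokens // PACKAGE_SIZE)  # integer ceiling division
    return packages * PRICES[(model, type_)]


amount = package_amount(7500, "8k", "input")  # 8 packages of 1,000 tokens
print(amount)
```

Using `Decimal` rather than floats keeps the amounts exact, which matters once many small charges are summed on an invoice.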

We can apply the same method to create plans for GPT-3.5 Turbo.

Our plan is ready to be used. Now let’s see how Lago handles billing.

Step 3 – How to monitor usage

OpenAI records token usage, the number of images generated, and speech-to-text transcription usage. These activities are converted into events that are pushed to Lago.

Let’s take GPT-4 as an example:
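As a rough sketch, a usage event for one GPT-4 request could be assembled as follows. The payload layout and field names (`transaction_id`, `external_customer_id`, `code`, `properties`) reflect our understanding of Lago's event format and should be checked against the API reference; the metric code `gpt_4`, the customer ID and the helper name `build_usage_event` are hypothetical.

```python
import json
import time
import uuid


def build_usage_event(customer_id, model, type_, tokens, timestamp=None):
    """Build the body of one usage event (field names are assumptions)."""
    return {
        "event": {
            # Unique ID so that a retried request is not counted twice.
            "transaction_id": str(uuid.uuid4()),
            "external_customer_id": customer_id,
            "code": "gpt_4",  # hypothetical billable metric code
            "timestamp": int(timestamp if timestamp is not None else time.time()),
            "properties": {
                "total": tokens,  # value summed by the billable metric
                "model": model,   # first dimension: "8k" or "32k"
                "type": type_,    # second dimension: "input" or "output"
            },
        }
    }


payload = build_usage_event("customer_1234", "8k", "input", 5000, timestamp=1700000000)
print(json.dumps(payload, indent=2))
```

The resulting body would then be sent to the events endpoint by whatever HTTP client or SDK the back end already uses.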

Lago will group events according to:

- The billable metric code;

- The model; and

- The type.

For each charge, the billing system will then automatically calculate the total token usage and corresponding price. This breakdown will be displayed in the ‘Usage’ tab of the user interface and on the invoice sent to the customer.

Wrap-up

Per-token pricing offers flexibility and visibility, and allows LLM and Generative AI companies like OpenAI to attract more customers.

With Lago, you can create your own metric dimensions to adapt this template to your products and services.

Give it a try and get started with Lago!
